-

Amplifire’s CEO Reflects on 2020: What This Year Has Taught Us

Bob Burgin

Amplifire CEO

2020 – a challenging year for everyone. Enough said?

Well, maybe not. I have to say that it has been truly inspiring to witness our clients’ heroic response during this trying time. Health systems’ heroic and dedicated staff took significant personal risk to care for us. T-Mobile kept lines open while delivering superior customer care. Our higher education clients helped over one million students succeed in their efforts to learn remotely. Professionals across the country found the motivation to earn certifications as their career paths were at risk. More than anything else, we thank you. Your tenacity during hard times is inspiring.

As for Amplifire, well, I think it has been a good year. You see, the world never entirely goes back to what it was before a time like this. Something we have all known for years but struggled to create just advanced 10+ years in only nine months. It’s the notion that sophisticated adaptive online training done right significantly outperforms classroom training.

I wouldn’t wish this year on anyone, but sometimes out of terrible events comes powerful change. I suspect we will get on airplanes for business a little less often now that we have broken the self-consciousness barriers and learned to look into each other’s eyes on a web call. And we have a new pattern of staying connected with loved ones across the country and the world.

And I similarly believe we will not go back to putting people in classrooms and teaching to the lowest proficiency in the room. This is the promise of adaptivity—people get instruction tailored to their location and circumstances on the path to mastery. Some go fast, others take more time to absorb information, but everyone can become proficient.

In 2020, we expanded our client relationships and added new clients. And as the radical move to online adaptive training hit hard, the demand for our advanced capabilities grew, and we added a host of new reseller partners fully embracing this new way of learning.

It was a challenging year, without a doubt. In April, we were taking pay cuts to ensure our ability to keep our no-layoff promise to our employees during COVID. Like everyone, we had no idea what the future would hold.

We will continue our focus on advancing the art and science of Knowledge Engineering to drive improved performance. It’s never been truer than this present moment that we live and work in a knowledge economy where human flourishing derives from the information stored in our minds as long-term memory.

It turns out that the most powerful, complicated, amazing computing device is not in some university basement. Not calculating rocket trajectories at NASA or finding obscure info for Google. Although it designed all the computing devices ever used.

It’s the human mind.

And finding ways to load knowledge into the human mind, well, that’s our obsession.

As 2020 draws to a close, we wish you our best and share our hopes for an exhilarating 2021.

And a very special thank you to our team, who never blinked.

Bob Burgin

CEO

-

Improving Pediatric Sepsis Protocols

Matthew J. Hays, PhD

Senior Director of Research and Analytics

Sepsis is the leading cause of death in children worldwide, with mortality rates between 4% and 20% in different pediatric populations and settings. When sepsis has become severe, death becomes 8% more likely for every hour that antibiotics are delayed. Timely diagnosis and treatment are the difference between life and death.

In response, Children’s Hospital Colorado (CHCO) has spearheaded a sepsis quality improvement initiative focused on improving early detection and effective management of sepsis. The goal was to use training to support clinicians’ quick, confident, and correct decision-making when pediatric sepsis is or should be suspected. The details of this endeavor were published in the latest edition of Pediatric Quality & Safety.

In the article, CHCO physicians Justin Lockwood and collaborators describe the development of a new sepsis course. The course integrates current national guidelines as well as best practices developed at CHCO to improve the recognition and management of pediatric sepsis. This course was jointly developed by sepsis experts at CHCO and the Amplifire Healthcare Alliance (AHA), a federation of top health systems working together to improve care and reduce harm.

The course was then deployed through Amplifire’s adaptive e-learning platform to frontline personnel. CHCO chose to partner with Amplifire on this effort for two reasons. First, Amplifire training has been shown to improve patient care and clinical outcomes. Second, Amplifire is built to detect when providers are sure that their knowledge is accurate or their decisions are sound—but they’re actually wrong. This confidently held misinformation (CHM) directly leads to missed diagnoses and even patient harm. “Amplifire introduced us to the concept of confidently held misinformation,” said Dr. Dan Hyman, CHCO’s Chief Medical and Patient Safety Officer.

Adaptive E-Learning

Amplifire’s training software is able to have clinical impacts because it is designed entirely around how we learn and remember. The Amplifire experience begins with questions about the learning objectives. Amplifire takes this question-first approach because well-designed questions can prepare the brain to learn. Many of the questions are asked in a multiple-choice format, which has been shown to improve the transfer of training beyond a single item’s learning objective.

When responding to questions in Amplifire, learners indicate the answer(s) they believe to be correct as well as their confidence in their decision, a patented process that improves long-term memory for the material.

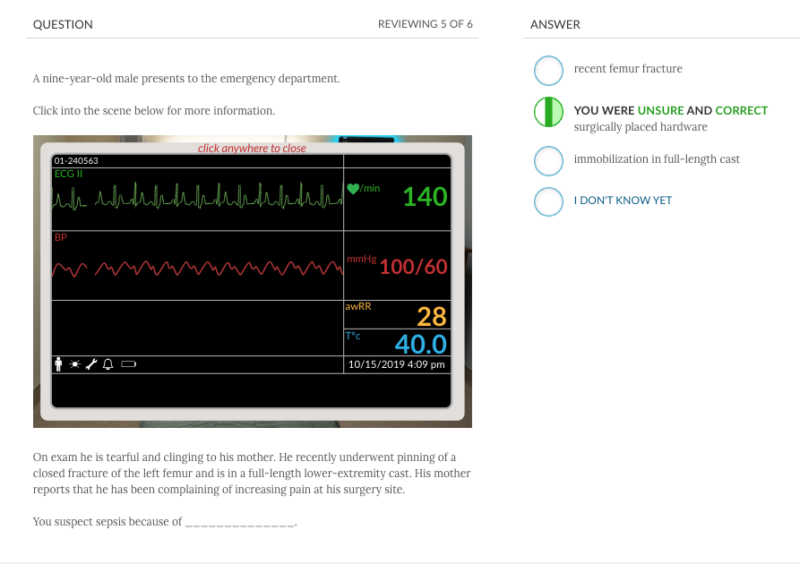

A sample question in CHCO’s Amplifire pediatric sepsis training module

Learners receive immediate feedback on whether their response was accurate and how their confidence was calibrated (e.g., “you were partially sure and one of your answers was correct.”). When a learner confidently answers a question correctly, corrective feedback is withheld; learners’ time is better spent on more productive activities. On the other hand, low-confidence correct answers and incorrect answers (especially in cases of CHM) are accompanied by delayed corrective feedback, which has been shown to differentially enhance learning.

The result is training that has unprecedented impacts on outcomes. One AHA member reported a 32% reduction in CAUTI when nurses were trained with Amplifire. In another study, Amplifire-trained nurses reduced CLABSI by 48%. Current investigations suggest that Amplifire helps providers reduce sepsis mortality rates, prevent pressure injuries and patient falls, and reduce surgical-site and C. diff infections.

Findings and Implications

At the time of publication, a total of 1,129 CHCO providers had used Amplifire; over 300 more have since completed training. Amplifire’s flexible, modular approach to content enabled CHCO to train RNs, MDs, and APPs in non-ICU inpatient and ED settings.

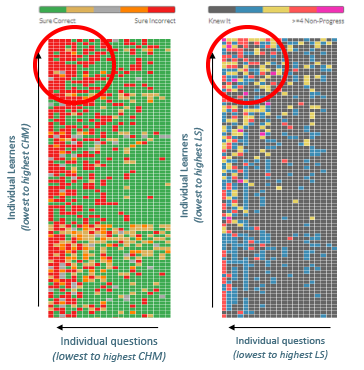

The average learner took 27 minutes to complete the training. Some of the component items were basic tests of knowledge. Others presented interactive cases and scenarios that required learners to judge the best course of action. In all cases, the Amplifire platform tracked CHM as well as when learners struggled to overcome a stubborn misconception. This information can be reviewed visually in the heatmaps below.

Amplifire heatmaps showing items in columns and learners in rows. The left heatmap shows the distribution of CHM; the right shows where providers struggled to learn. Within each heatmap, the learners are sorted top to bottom, from greatest to least CHM/Struggle; the items are sorted left to right in the same way. The top left of each heatmap (circled) represents the learner-item combinations with the greatest likelihood to cause issues in the prevention and management of pediatric sepsis at CHCO.

Amplifire’s heatmaps can depict different aggregations of learners (e.g., by manager, department, shift) and content (e.g., by topic). Operational issues can quickly emerge; perhaps everyone who works for Chris shares the same misconception, or all nurses in a particular wing struggle with a particular procedure. Administrators can then evaluate and remedy these problems. According to Dr. Hyman, the heatmaps pinpoint exactly where “and how much work we have yet to do.”
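The ordering used in the heatmaps (learners and items each sorted from greatest to least CHM or struggle) can be illustrated with a small sketch. This is not Amplifire’s implementation; the function name `sort_heatmap`, the learner and item labels, and the 0/1 flags are all hypothetical and exist only to show how sorting pushes the riskiest learner-item combinations toward the top left.

```python
from typing import Dict, List, Tuple

def sort_heatmap(
    flags: Dict[str, Dict[str, int]]
) -> Tuple[List[str], List[str], List[List[int]]]:
    """Order learners (rows) and items (columns) from greatest to least total."""
    learners = list(flags)
    items = sorted({item for row in flags.values() for item in row})
    # Total flags per learner and per item decide the ordering.
    row_total = {l: sum(flags[l].get(i, 0) for i in items) for l in learners}
    col_total = {i: sum(flags[l].get(i, 0) for l in learners) for i in items}
    rows = sorted(learners, key=lambda l: -row_total[l])
    cols = sorted(items, key=lambda i: -col_total[i])
    grid = [[flags[l].get(i, 0) for i in cols] for l in rows]
    return rows, cols, grid

# Hypothetical data: 1 means the learner showed CHM on that item.
chm = {
    "learner_a": {"item_1": 0, "item_2": 1, "item_3": 0},
    "learner_b": {"item_1": 1, "item_2": 1, "item_3": 1},
    "learner_c": {"item_1": 0, "item_2": 1, "item_3": 1},
}
rows, cols, grid = sort_heatmap(chm)
for learner, cells in zip(rows, grid):
    print(learner, cells)
```

After sorting, the densest cells cluster in the top-left corner, which is the region the caption describes as circled.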

Dr. Lockwood summarized Amplifire’s dual capabilities by saying that “the use of confidence-weighted testing allowed us to provide needed education to frontline clinicians while simultaneously identifying areas of risk for ongoing sepsis improvement. In this way, the impact and sustainability of our educational intervention was improved.”

Efforts are currently underway at CHCO to use Amplifire in other patient-care quality improvement initiatives. For a full list of available Amplifire courses, click here. To see their impacts on clinical and performance outcomes, see Amplifire’s case studies. To learn more about the Amplifire Healthcare Alliance, which includes systems like CHCO, Partners Health, Intermountain Health, Banner Health, and more, click here.

Reference: “Is this Sepsis?” Education: Leveraging Confidently Held Misinformation and Learner Struggle

-

AI and The Future of Learning

Nick Hjort

SVP of Product and Development

For many people the term Artificial Intelligence (AI) conjures frightening visions of systems like Skynet from Terminator or HAL from 2001, which may lead to a valid level of apprehension of any technology that claims to employ AI. This fear may increase when AI is applied to something as personal as learning. In fact, great minds like Elon Musk and Stephen Hawking have warned us against the potential dangers of Artificial Intelligence.

The truth is that great benefit can be found in online learning with the lightest application of Artificial Intelligence. Merriam-Webster defines Artificial Intelligence as “a branch of computer science dealing with the simulation of intelligent behavior in computers.” Intelligent behavior can manifest itself in computers in many ways, from the overt to the subtle, while still not getting close to the dystopian image of AI from the movies.

When done properly, the utilization of AI can be applied without distracting from the learning experience itself. By creating a sophisticated algorithm, AI can create learning environments that improve learner insights and experience.

Here are just a few ways that you may see AI applied within online learning experiences. They range from the obvious to the behind-the-scenes application of Artificial Intelligence within learning software.

Personalized Learning

Developing a personalized learning path or a tutor-like experience increases engagement as it makes the experience meaningful. Creating platforms that adjust to only deliver content that learners are not knowledgeable about improves engagement and desirability while also providing instructors with valuable insights. These small nudges and personalizations simulate interpersonal experiences, but cost less and provide ample insight into knowledge gaps.

These personalized learning paths manifest in two different ways:

One way is through assignment generation from one learning experience to another. The AI system uses the results of one learning experience to determine what the learner should do next. That may be more exposure to a particular topic because the results show the learner didn’t grasp it well enough, or new material that builds on what has already been learned because the learner showed proficiency in the base topic.

The other way is personalizing the learning experience while it is happening. An AI system can determine how well a learner is grasping concepts and adjust in real time to what a learner needs. Strategies like branching, skipping topics where mastery is shown early, and repeating topics in other forms where struggle is present are just a few of the methods to achieve this personalization. Advantages of personalizing the learning experience are:

- Time savings for learners who show mastery early or have a large amount of initial knowledge on the topic.

- Assurance that mastery, not just time spent, has been achieved by the time the learning experience is completed.
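As a rough illustration of the skip-and-repeat strategies mentioned above, the sketch below builds a learning path from per-topic scores. It is a toy under stated assumptions, not a description of any real product: the thresholds `MASTERY` and `STRUGGLE`, the function `plan_path`, and the topic names are all hypothetical.

```python
from typing import Dict, List

MASTERY = 0.9   # hypothetical threshold: mastery shown early, skip the topic
STRUGGLE = 0.5  # hypothetical threshold: repeat the topic in another form

def plan_path(scores: Dict[str, float]) -> List[str]:
    """Build a path that skips mastered topics and repeats struggled ones."""
    path: List[str] = []
    for topic, score in scores.items():
        if score >= MASTERY:
            continue  # saves time for learners with strong initial knowledge
        path.append(topic)
        if score < STRUGGLE:
            # Struggle detected: schedule the topic again in a different form.
            path.append(f"{topic} (alternate form)")
    return path

plan = plan_path({"fractions": 0.95, "decimals": 0.7, "percentages": 0.3})
print(plan)
```

A real system would update these decisions continuously as the learner responds, rather than planning once from a fixed set of scores.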

Behavior Assistance

Behavior Assistance is a powerful AI tool that can improve attention and offer encouragement or guidance. AI assistance tracks when learners are disengaged, trying to cheat, or kicking ass. When disengaged behavior is detected, AI can step in and change those behaviors. We know that focus and motivation are key behaviors that lead to lasting learning, so the AI will encourage or correct disengaged behavior so that a learner can maximize the benefit from their time spent learning.

An AI nudge could be the difference needed to get learners to realize that the eLearning is just as real and valuable as a face-to-face discussion or performance evaluation. Behavior Assistance also gives designers and instructors valuable insight into whether performance results align with learner behavior, and it indicates whether the learning is going to lead to change. It could be the insight that determines whether a learner is apathetic, genuinely trying but struggling, or taking learning seriously and succeeding. This gives trainers and instructors the information to better facilitate targeted interventions and conversations to further improve upon the learner’s knowledge gains and resulting performance.

During the learning experience, behavior assistance can manifest in either a passive or active manner. Passive assistance takes the form of learner messaging that nudges them into the correct behavior. Passive assistance may also go as far as to inform an instructor of the behavior of the learner so that a needed intervention may occur. Active assistance takes more of a commanding role of the experience. The AI may actively force a learner to do more work or repeat a topic when poor behavior is detected. Other actions may be taken such as a forced break from the software to try to get the learner in the correct mindset for learning.

Instructor Assistance

Perhaps one of the most valuable tools AI can provide to improve learning outcomes and truly shape the future of learning is reporting. A learner’s behavior and performance can be shown through reports indicating where interventions are needed or even where a shift in our own instruction should occur. A well-written AI can pinpoint where an individual or group struggles, is uncertain, holds incorrect prior knowledge, or holds dangerous information. From these reports, personalized learning through the platform is provided and instructors can also align their own instruction and coaching to specifically meet the needs of their learners.

The aim for AI in learning should not be to replace an instructor or manager in the overall learning experience. AI should be the first line of analysis to try to assess what is going on with a learner. This will aid an instructor in pinpointing where their time is best spent with a specific learner or in front of an entire class. As with most software, the goal is to optimize time to be sure that the highest level of value is gained from the time spent on a task.

ChatBot

ChatBot is a feature that many learning platforms employ to generate a more interpersonal experience and give learners more autonomy to freely ask questions. In many instances, learners will be more open with a ChatBot than they would in a face-to-face environment because any social hindrances are completely removed. ChatBots can lead to a distracted or frustrated learning experience if not created thoughtfully. Learners can tell when answers are automated and become frustrated if the ChatBot does not deliver satisfactory answers to their questions.

Conclusion

AI is incredibly powerful. It can save organizations the laborious task of analyzing curriculum or results just to determine what’s working and what is not. AI can also save organizations time by automating tedious tasks. Through personalized and data-driven eLearning, less time is needed to cover curriculum; thus, organizations can spend their time delivering more individualized and meaningful approaches to training than before. AI is powerful, but only works really well when we keep humanity involved.

Remember, AI is best employed when it is not noticed. It should not be a distraction to learning; rather it should be a natural enhancement to a learning product that improves outcomes and experiences. Behind every insight, every interaction or algorithm created, people are there. AI can make life easier, so more time can be spent building meaningful and productive relationships that evoke greater results.

-

The Beauty of Multiple Choice

It’s now well understood that self-testing is perhaps the world’s most powerful trigger for turning on the mental mechanisms that create long-term memory and the ability to recall something in the future. Memory storage, like most brain processes, is a pattern of neurons connected at their synapses by electrochemical processes. Retrieving a memory through self-testing strengthens that pattern better than any other technique.

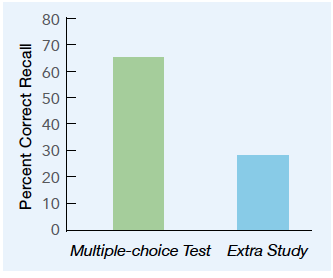

Urban myth would have us believe that multiple choice tests are mostly good for quick grading. But in 2009, researchers proved this myth about multiple choice was wrong. It turns out that…

- Multiple choice tests increase the retention and recall of information.

- They are better than recall tests or essay questions or extra study for building durable memory.

- They do not promote the mental phenomenon of “retrieval induced forgetting.”

Multiple choice is not merely the recognition of a correct answer. It can more accurately be described as recognition combined with recall because the alternative answers must each be contemplated in turn and then selected as right or rejected as wrong. Multiple choice retrieval unleashes powerful effects that increase both retrieval strength and storage strength of the correct answer plus the alternatives. It’s a virtuous circle of memory fortification.

Solving the Problem of Retrieval Induced Forgetting

Research has shown that multiple choice solves a serious problem with other kinds of testing: retrieval-induced forgetting, which occurs when the brain is asked to retrieve a memory. For example, suppose I ask you to name the fourth planet from the sun. If you recall that it’s Mars, then the memory of Mars is strengthened, as we would imagine, but related memories become weaker: the positions of Jupiter and Venus are suppressed. This is something we would not have imagined until it was shown experimentally.

Think of it this way. The memory of new learning is in a kind of competition with the memory of related, but older learning. To avoid confusion and to help make rapid decisions, animals and people need to instantly know which memory is most up to date. To accomplish this, a mental system evolved to suppress the strength of older, related information that may compete with the more recent and likely more relevant information. New information causes old information to be forgotten.

“The results imply that taking a multiple-choice test not only improves one’s ability to recall that information, but also improves one’s ability to recall related information.” – Little & Bjork, UCLA

Enhancing the Memory of Related Information

Multiple-choice tests do not damage access to related information. Just the opposite, they enhance the retrieval strength of related information. In multiple-choice tests, a learner’s brain is asked to compare and contrast the truth of the competing alternatives. This strengthens the memory trace of those alternatives—quite the opposite of what occurs in the brain during a recall test, where forgetting of the alternatives is encouraged by the suppression of related memory.

Multiple Choice Plus Confidence in Amplifire

Confidence appraisals magnify the superior effects of multiple-choice testing. Thinking about one’s confidence focuses the brain’s resources and attention through activated dopamine circuits. The spotlight of consciousness is brought to bear on the question at hand. With confidence focusing attention on the plausible alternatives of a well-constructed multiple-choice question, learning and memory benefit greatly. Students who use Amplifire report that their attention is focused, that learning occurs more rapidly, and that future recall is easier.

-

Leveraging Cognitive Science and Artificial Intelligence to Save Lives

Medical error is the third-leading cause of death in the United States, just behind heart disease and cancer. Confidently held misinformation in the minds of clinicians is implicated in half of these cases of preventable harm.

A recent article published by Springer Nature and presented at AIED 2019 by Amplifire’s Sr. Director of Research and Analytics, Matt Hays, Ph.D., shares how an adaptive learning platform harnesses artificial intelligence and the principles of cognitive psychology to find and fix knowledge gaps among clinicians to reduce medical errors.

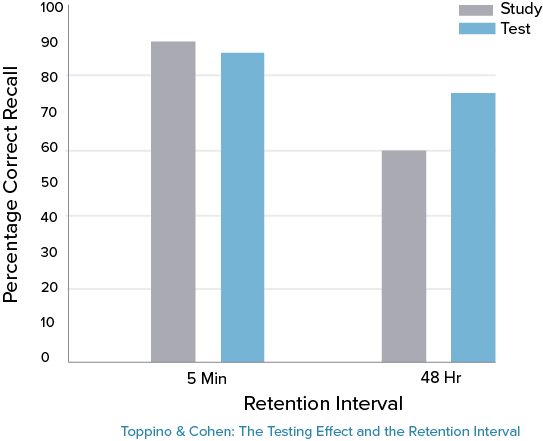

Artificial intelligence (AI) is widely used in education, largely through intelligent tutoring systems (ITSs). AI is the future, but related findings from cognitive psychology and other learning sciences have gained less traction in ITS research and the classroom. One reason may be that some cognitive phenomena are counterintuitive; how learners, teachers, and even researchers think learning should work is not always how it actually works.5 For example, the testing effect is the finding that retrieving information from memory is much more powerful than being re-exposed to the information (e.g., by re-reading; 6). But classrooms in 2019 still rely heavily on watching videos, sitting through lectures, and reading chapters. Even ITSs often use testing exclusively for assessment purposes (although there are exceptions, e.g., 7).

Amplifire’s adaptive learning platform is built on principles of cognitive science that are proven to promote learning and long-term memory retention. The platform uses AI to determine how best to leverage many of those principles in real time.

AI-Directed Cognitive Science

Amplifire optimizes learning by adapting to each individual’s knowledge base. It uses AI to determine if and when learners need additional learning, provide corrective and metacognitive feedback, and guide learners towards mastery.

The platform begins by asking questions in a variety of formats (multiple-choice, select-all, matching, interactive). This approach is beneficial even if the learner couldn’t possibly know the correct answers.8, 9 Attempting to answer questions is perhaps the most powerful way to gain knowledge and skills,6 even if the answer attempts are incorrect.10

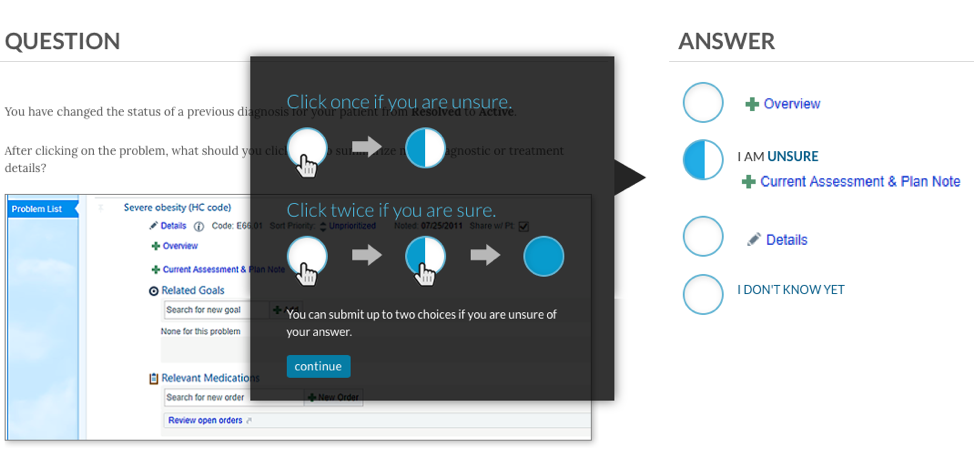

When responding to questions in Amplifire, learners indicate their confidence in their responses, making them consider the question more carefully 11 and improving their memory for the material 12. This cognitive benefit only occurs when answers and confidence are considered simultaneously,13 a process Amplifire has patented. Learners in Amplifire click an answer once to indicate partial confidence or twice to indicate certainty. They can also click “I don’t know yet.”

After submitting a response, learners receive immediate feedback on whether their response was correct. Metacognitive feedback guides learners to understand whether they have been under- or overconfident.14 Later they will receive corrective feedback if they answered incorrectly.
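The idea of metacognitive feedback can be made concrete with a small sketch that labels a response as over- or underconfident. The three confidence levels come from the text above (one click, two clicks, or “I don’t know yet”); the enum, the function name `calibration`, and the label strings are hypothetical and are not Amplifire’s actual wording or logic.

```python
from enum import Enum

class Confidence(Enum):
    SURE = "sure"                   # answer clicked twice
    PARTIAL = "partially sure"      # answer clicked once
    DONT_KNOW = "I don't know yet"  # learner declined to answer

def calibration(confidence: Confidence, correct: bool) -> str:
    """Return a hypothetical calibration label for one response."""
    if confidence is Confidence.SURE:
        return "well calibrated" if correct else "overconfident"
    if confidence is Confidence.PARTIAL and correct:
        return "underconfident"
    # Partial confidence with a wrong answer, or "I don't know yet".
    return "uncertain"

print(calibration(Confidence.SURE, False))
```

The "overconfident" case (sure and wrong) corresponds to what the article calls confidently held misinformation.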

Amplifire’s AI also determines whether and when to provide self-regulatory feedback, which is focused on correcting learner behavior in the platform. For example, a learner might be told to “make sure to read the question carefully” if they answer in less time than it would take to read the question.
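A check like the one in this example could be sketched as follows. The article does not say how reading time is estimated, so the 250 words-per-minute figure, the function names, and the word-count approach are assumptions made purely for illustration.

```python
WORDS_PER_MINUTE = 250  # assumed average reading speed; hypothetical value

def minimum_reading_seconds(prompt: str, wpm: int = WORDS_PER_MINUTE) -> float:
    """Estimate the least time needed to read the prompt, from its word count."""
    return len(prompt.split()) * 60 / wpm

def answered_too_fast(prompt: str, response_seconds: float) -> bool:
    """True when the learner answered faster than the prompt could be read."""
    return response_seconds < minimum_reading_seconds(prompt)

prompt = " ".join(["word"] * 50)  # stand-in for a 50-word question
if answered_too_fast(prompt, response_seconds=3.0):
    print("Make sure to read the question carefully.")
```

A production system would likely calibrate the threshold per learner and per item rather than rely on a single fixed reading speed.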

Corrective feedback for a given item is provided after a delay, which enhances learning.15 Amplifire’s AI optimizes this delay by considering information collected about the learner (e.g., their estimated ability), the content being learned (e.g., the item’s estimated difficulty), and the learner’s response to that particular item (e.g., how long the learner spent reading the prompt). The corrective feedback takes the form of elaborative explanation16 and, when appropriate, worked examples 17. The rationale behind the correct response is provided and the error the learner made is explained (e.g., miscalculation, faulty knowledge, etc.).

Amplifire does not provide corrective feedback after full-confidence correct responses because doing so does not improve retention.18 Learners’ time is therefore better spent on more productive activities.19 Corrective feedback is, however, provided after partial-confidence correct responses,20 and is especially powerful in cases of confidently held misinformation.21

For problems or conceptual questions on which learners were not both fully confident and correct, Amplifire repeatedly tests the learner until its AI has determined that they have reached a mastery state. These repeated attempts profoundly improve the learner’s long-term retention of the material.22 Amplifire’s AI considers learner, content, and response data in order to determine the optimal delay between successive attempts on a concept. This delay harnesses the spacing effect, which is the finding that distributing learning over time is more effective than massing it together.23
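The feedback and retesting rules described in the last two paragraphs can be summarized in a short sketch. It encodes only what the text states: full-confidence correct responses get no corrective feedback and no retest, while everything else does. The names `Plan` and `plan_after_response` and the confidence strings are hypothetical, and the real platform’s timing decisions (the AI-chosen delays) are deliberately left out.

```python
from typing import NamedTuple

class Plan(NamedTuple):
    corrective_feedback: bool  # show an explanation after a delay
    retest: bool               # ask about this concept again later
    chm: bool                  # confidently held misinformation

VALID = {"sure", "partial", "unknown"}  # hypothetical labels

def plan_after_response(confidence: str, correct: bool) -> Plan:
    """Decide follow-up actions for a single response."""
    if confidence not in VALID:
        raise ValueError(f"unknown confidence level: {confidence!r}")
    mastered = confidence == "sure" and correct
    return Plan(
        corrective_feedback=not mastered,
        retest=not mastered,
        chm=(confidence == "sure" and not correct),
    )

print(plan_after_response("sure", False))
```

Treating “I don’t know yet” the same as an incorrect answer is an assumption here; the text only says that items not answered both correctly and with full confidence are retested.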

Amplifire targets the point in the learner’s forgetting curve where a retrieval attempt is difficult but not impossible.24, 25

Altogether, Amplifire leverages AI and cognitive science to optimize the learner’s time spent mastering the material, promote long-term retention and transfer to related tasks, and maintain learner engagement.

Application and Efficacy in Healthcare

Amplifire has partnered with career-focused online universities, GED providers, and other educational institutions that support non-traditional and underserved student populations. More recently, Amplifire has expanded into healthcare training and formed the Healthcare Alliance.

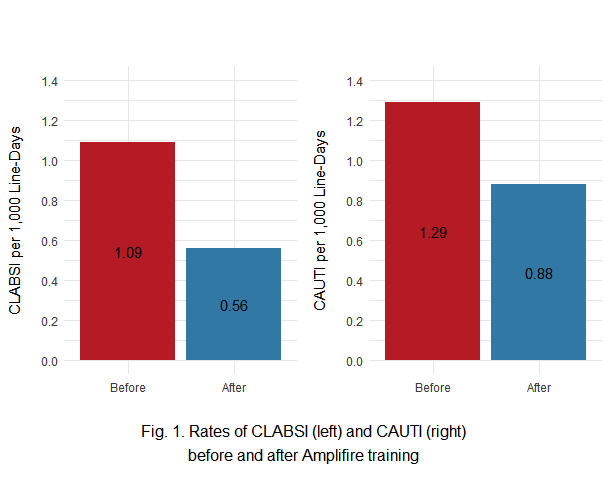

Medical errors are responsible for more than 250,000 fatalities in the United States annually, making them the third-leading cause of death.26 More than half of all medical errors are attributed to the “cognitive failures” of healthcare professionals.27 Amplifire was used at a large healthcare system to combat the cognitive failures that contribute to two hospital-acquired infections: CLABSI and CAUTI. The healthcare system made no other changes to policies, training, or available resources during this period; all effects were attributed to Amplifire.

Central-Line-Associated Bloodstream Infections (CLABSI)

A central line is a thin tube (catheter) placed into a large vein. Central lines are used to administer nutrition or medication (e.g., drugs for chemotherapy), and to monitor central blood pressure during acute care. When a healthcare provider inadvertently contaminates the equipment or the insertion site, the patient can develop a central-line-associated bloodstream infection (CLABSI). The incidence of CLABSI is expressed in terms of the number of infections caused for every 1,000 days that patients had central lines (“CLABSI per 1,000 line-days”).

All central-line-attending nurses at a large healthcare system (N = 3,712) were trained in Amplifire. The results are displayed in the left panel of Fig. 1. In the 28 months before training, there were 1.09 CLABSI per 1,000 line-days. In the seven months after training, there were 0.56 CLABSI per 1,000 line-days—a reduction of 48%. An exact Poisson test indicated a statistically significant reduction in the CLABSI rate after training: p = .00014. Given CLABSI’s mortality rate of 25%, this reduction should save approximately 13 lives per year at this health system.28
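For readers unfamiliar with an exact Poisson test, one common form compares two event rates by conditioning on the total number of events, which reduces the problem to a binomial tail. The sketch below shows that form only; the article does not specify which variant was used or whether it was one-sided, and the infection counts and line-days are invented for illustration and are not the study’s data.

```python
from math import comb

def exact_rate_test(x_before: int, t_before: float,
                    x_after: int, t_after: float) -> float:
    """One-sided exact conditional test that the 'after' rate is lower.

    Under the null hypothesis of equal rates, and given the total
    n = x_before + x_after, the 'after' count is
    Binomial(n, t_after / (t_before + t_after)).
    Returns P(X <= x_after) under that null.
    """
    n = x_before + x_after
    p = t_after / (t_before + t_after)
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k)
               for k in range(x_after + 1))

# Hypothetical counts (NOT the study's data): infections and line-days.
p_value = exact_rate_test(x_before=109, t_before=100_000,
                          x_after=14, t_after=25_000)
print(f"one-sided p = {p_value:.4f}")
```

A small p-value means that so few infections in the later period would be unlikely if the underlying rate had not changed.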

Catheter-Associated Urinary Tract Infections (CAUTI)

A urinary catheter is a thin tube inserted into the bladder via the urethra. An indwelling catheter remains in the urethra and bladder for continuous drainage of urine and monitoring of urine output during acute care. As with central lines, healthcare workers’ mistakes can contaminate the catheter and cause a catheter-associated urinary tract infection (CAUTI). Similar to CLABSI, the incidence of CAUTI is expressed in terms of the number of infections caused for every 1,000 days that patients were catheterized (“CAUTI per 1,000 catheter-days”).

Urinary-catheter-attending nurses (N = 4,512) at the same healthcare system were trained in Amplifire. The results are displayed in the right panel of Fig. 1. In the 28 months before training, there were 1.29 CAUTI per 1,000 catheter-days. In the seven months after training, there were 0.88 CAUTI per 1,000 catheter-days—a reduction of 32%. An exact Poisson test indicated a statistically significant reduction in the CAUTI rate after training: p = .01363.
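The rate and reduction figures quoted for CLABSI and CAUTI follow from two simple formulas, sketched here. The function names are hypothetical; the before and after rates are the ones reported in the text, while the counts passed to `rate_per_1000` are made up to show the unit conversion.

```python
def rate_per_1000(infections: int, device_days: int) -> float:
    """Infections per 1,000 line-days or catheter-days."""
    return infections * 1000 / device_days

def relative_reduction(before: float, after: float) -> float:
    """Fractional drop from the 'before' rate to the 'after' rate."""
    return (before - after) / before

# Rates as reported in the text (per 1,000 device-days).
clabsi = relative_reduction(1.09, 0.56)
cauti = relative_reduction(1.29, 0.88)
print(f"CLABSI: {clabsi:.1%}, CAUTI: {cauti:.1%}")

# Made-up counts, only to illustrate the unit: 109 infections in 100,000 days.
example_rate = rate_per_1000(109, 100_000)
```

Because the published rates are rounded to two decimals, recomputing from them can differ slightly from the reported percentages, which were presumably derived from unrounded figures.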

Although both CLABSI and CAUTI were reliably reduced, the smaller magnitude of the CAUTI reduction may be attributable to two factors. First, only nurses interact with central lines, but both nurses and technicians interact with urinary catheters; part of the caregiver population was not trained on CAUTI. Second, the CAUTI course did not employ any multimedia.29 A revised and improved CAUTI course will be distributed to both nurses and technicians in the coming months.

Amplifire relies on principles of cognitive science. By allowing AI to determine how best to leverage many of those principles in real time, Amplifire delivers individually optimized learning in a wide variety of domains (learn more about our role in healthcare, aviation, and higher education). Its test-focused approach improves learners’ ability to retrieve information from memory. Its emphasis on confidence creates an additional dimension of learner introspection and understanding. Its multiple types of scaffolded feedback ensure that difficulty, engagement, and remediation are managed effectively, while also supporting metacognition and self-regulation. Amplifire’s ability to substantially reduce medical error demonstrates the power of cognitive science working hand in hand with AI.

-

The Testing Effect: Putting Mom to The Test

Remember those flashcards mom made you cycle through every morning while she drove you to school? Times tables, vocab words. Then she’d start quizzing you on your spelling words. As much as you might hate to admit it, these methods worked. You remembered the information she was trying to pound into your head. But why did it work? The answer: the testing effect.

The testing effect has gone by many names over the years, including active recall and retrieval practice, but they all refer to the same mechanism: asking your brain to remember and retrieve information on cue. And, as Henry Roediger and Jeffrey Karpicke put it, “Testing has a powerful effect on long-term retention.”

Some might say, “Flashcards are a lot of work. What about just re-reading? That’s got to be as good as testing, right?” Wrong. Re-reading material might make you better at reading the test questions, but making yourself pull the information out of your brain makes you better at answering the questions.

Studies have compared students who prepared for an exam by testing themselves against students who prepared by reading. The result: the students who tested themselves scored higher on the exam than the students who only read. Furthermore, the success of testing is linked to timing. The power of the testing effect increases as more time passes between the practice test and the actual exam.

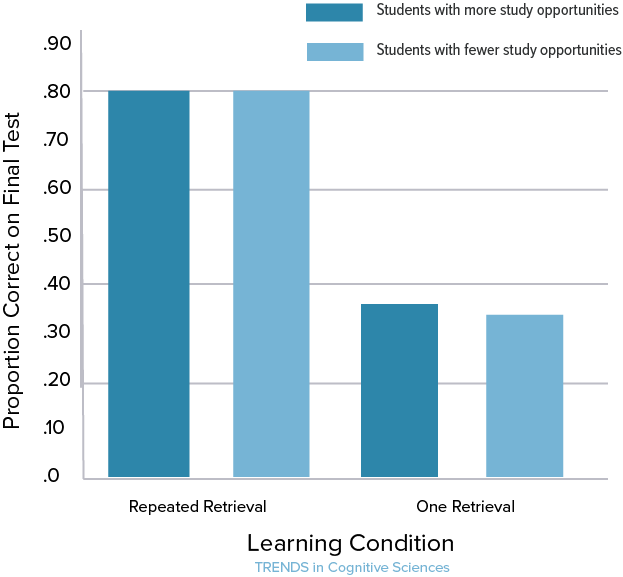

Research has also shown that multiple practice tests can further improve retention and test performance. Repeatedly asking your brain to dig around and produce sought-after information creates stronger connections and retrieval pathways in the brain. In one study, students who repeatedly practiced retrieval (testing) doubled their proportion of correct responses on the final test compared to students who only practiced retrieval once.

So what can we learn from the testing effect? Not only are quizzes the way to go when we want to remember information, but also—at least when it comes to flashcards and spelling quizzes—mom was right.

-

Fact or Fiction: Learning Styles Lead to Better Learning

Have you ever been pushed into group work even though it wasn’t necessary for the task? Or been asked to build a 3-D model of your department’s workflow? Such activities may appeal to a learner’s perceived learning preferences (auditory, visual, verbal, interpersonal, or kinesthetic, meaning tactile or physical) but don’t result in better recall of the material. Educators and education institutions have long believed that learning styles not only matter but are the key to effective learning. Many educators have been encouraged to create learning experiences based on these “styles” to improve learning outcomes. But do such experiences actually result in better learning? And do these learning styles help instructors understand how learning actually happens?

An individual’s learning style can be determined by a learning inventory questionnaire, typically VARK or Index of Learning Styles. Instructors use the insights from these questionnaires to tailor their instruction to meet the perceived learning styles of their audience. While this knowledge is interesting, a learner’s perceived learning style should not be the sole determining factor for instructional methodology or keep educators from teaching in a manner best suited to their content. Learning styles should be viewed as learner preferences, not stand-alone requirements for learning.

Many learners believe they can only learn via a specific style. For example, after taking the questionnaire, a learner is told she is an auditory learner and should listen to lectures in order to learn the information well. Another learner believes he is a visual learner and says, “I can only learn if there are videos.” A test went well for another learner because he used hand-made flashcards, so he believes he is a verbal learner. Yet another learner reviewed for an exam by building models of molecules out of pipe cleaners and cotton balls, so he believes he is a kinesthetic learner. However, years of research call these claims into question. In a study published in 2017, researchers found no association between performance and a learner’s believed learning style, or rather preference. In fact, this persistent belief in learning styles is due to a type of cognitive bias known as confirmation bias: a learner believes something to be true, and when the belief is confirmed by observation, she neglects contrary evidence.

Harold Pashler, Mark McDaniel, Doug Rohrer, and Robert Bjork published work in 2009 that concluded, “there is no adequate evidence base to justify incorporating learning-styles assessments into general educational practice.”

However, learning styles shouldn’t be disregarded entirely. Instead, they should be tied to the content rather than the learner. In other words, a play should not be just read, but performed. Art is to be seen and experienced. Anatomy and physics should be practiced in a laboratory. The incorporation of different learning styles when aligned with the content likely will increase engagement from the learner. Additionally, educators must understand what is going on in the brains of their learners in order to make their instruction more effective.

The brain does not work in isolation: visual cues aren’t interpreted separately from auditory cues. Signals from all senses are transmitted simultaneously into neural pathways where memories are formed. Learning occurs when these memories are retrieved. Memories are made after associations (emotional, physical, etc.) have been formed and the act of retrieval has been practiced. Forgetting (when the brain is unsuccessful in retrieving the information it is looking for) may seem like a bad thing, but it is a powerful force that encourages deeper memory formation.

So what should educators do? Create learning experiences based on the content and utilize proven methodologies that foster long-term learning: metacognition, retrieval, feedback, direct instruction, and application. The delivery of content should consider how to maximize learner engagement, which leads to increased curiosity and in turn, memory formation, while remaining focused on the objective. Know your audience, but also know the science of cognition. Deliver instruction that cues the neural pathways that create long-term connections within the brain, sparking memory-formation and a thirst for more.

-

Forgetting Is Good for You

Matthew Jensen Hays, Ph.D.

Senior Director of Research and Analytics

We all complain about how lousy our memories are. But forgetting is one of the most important tasks your brain undertakes. In fact, your brain intentionally and immediately discards almost everything you experience. Sounds persist for a few seconds before they are irretrievably lost. Images are trashed after at most a half-second. Try it yourself: Close your eyes and count the letters in this sentence.

Even when something survives all of these filters, it usually vanishes soon after. How many times have you been introduced to someone only to have no idea what their name is after a minute or two?

What’s going on here? Why is your brain so intent on throwing away everything you work to learn?

A popular myth is that you’ve got limited space and your brain is aggressively trying to make room for new information. In reality, anything you’ve stored lasts nearly forever. You just lose access to it—which is why you have an “ohhh yeah” moment when you find your keys. Of course that’s where you put them. The information was in there. You just couldn’t access it.

It turns out that forgetting—in the form of this loss of access—is an adaptation. Consider the horror of perfect memory: You’d remember with perfect clarity every time you made a mistake, and every time someone was rude to you, and every time you hurt someone’s feelings. You’d call to mind every phone number—yours and others’—equally well. You’d vividly recall every time you bit your tongue, and every time you inhaled. You’d go insane.

So how do we remember anything?

Researchers in the psychological sciences have catalogued dozens of triggers that cause your brain to hold onto information. An example is when you encounter something repeatedly, especially if those encounters are spread out in time. Your brain expects it to come up again in the future, and so information about it is preserved. This “spacing effect” works on such a fundamental neurological level that it is even found in the not-quite-brains of sea slugs!
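For readers who like to see the idea in concrete form, here is a minimal sketch of a spaced review schedule. It is an illustration only: the function name `review_dates`, its parameters, and the choice to double the gap after each review are assumptions made for this example, not something the research above prescribes.

```python
from datetime import date, timedelta


def review_dates(first_exposure, first_gap_days=1, growth=2, reviews=5):
    """Return review dates whose gaps grow after each review.

    All parameter names and default values are illustrative assumptions.
    """
    schedule = []
    gap = first_gap_days
    day = first_exposure
    for _ in range(reviews):
        day = day + timedelta(days=gap)  # next encounter, `gap` days later
        schedule.append(day)
        gap *= growth                    # push the following encounter further out
    return schedule


# Example: something first seen on January 1, 2021
for d in review_dates(date(2021, 1, 1)):
    print(d.isoformat())
```

With the defaults, the encounters fall 1, 2, 4, 8, and 16 days apart, so the same material keeps coming back just as it is starting to fade.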

Another trigger is the retrieval of information from your brain. People who prepare for a test by calling the material up from memory get good at bringing it up from memory. People who prepare for a test by re-reading the material get good at reading the test questions, but not at knowing the answers.

These triggers operate on mechanisms beyond your conscious control. We’ve all thought, “Jeez, I really need to remember this,” only to have it suffer the same fate as almost everything else. You may think something is important, but your brain doesn’t care what you think.

Instead, you need to use the triggers to get your brain to agree with you that something is important. For remembering someone’s name, you can test yourself under your breath. For more complex materials, you may want to rely on software that incorporates research on these cognitive triggers.

And the next time you blank on a movie star’s name—but still recall the entire theme song to a cartoon you haven’t seen since you were six-going-on-seven—remember that these are side effects of your brain generally taking pretty good care of you.

-

Learning That’s Gamified

All games use powerful triggers that cause learning, long-term memory, and motivation. This is true of board games, video games (which now generate more revenue than movies), and sports. You can think of triggers as being out here in the real world in the form of things like people, nature, books, videos, and techniques in software. Certain triggers cause specific brain circuits to switch on, causing learning and long-term memory so we can remember what we’ve learned at a future point in time. Triggers are the cause, and learning is the effect. Some of the most effective triggers work through emotion and attention, two hallmarks of games.

These are the six gaming triggers built into the Amplifire algorithm:

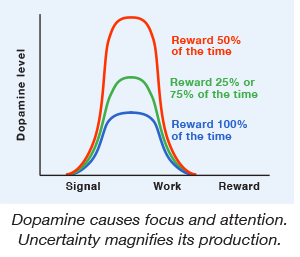

Uncertainty propels game players forward because they don’t know exactly what is coming next. Uncertainty triggers curiosity, a mental state seen at right in a set of fascinating experimental results. Attention-producing levels of dopamine skyrocket whenever a reward has a 50% likelihood of occurring (top curve). As Robert Sapolsky notes, “You have introduced the word maybe into the equation and that is reinforcing like nothing else on earth.” Dopamine and attention levels fall from this peak when the reward becomes predictable.
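One simple way to see why a 50% chance is the most uncertain case, offered here as an illustration and not as the model used in the experiments: treat the reward as a yes-or-no event R that occurs with probability p (both symbols are introduced for this example). Its variance is largest at p = 1/2 and shrinks to zero as the reward becomes either certain or impossible.

```latex
\[
  \operatorname{Var}(R) = p\,(1 - p), \qquad
  \frac{d}{dp}\,p\,(1 - p) = 1 - 2p = 0 \;\Longrightarrow\; p = \tfrac{1}{2}, \qquad
  \operatorname{Var}(R)\big|_{p = 1/2} = \tfrac{1}{4}.
\]
```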

In Amplifire, the perceived reward is closing an information gap. First, you make a bet on your knowledge with the proposition that asks, “How sure are you?” That question stimulates uncertain expectations of reward—will you close the information gap? Maybe. Second, as you progress, Amplifire withdraws material that has been mastered. What’s left is increasingly harder material. This maintains high uncertainty and focused attention as you move through a module.
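The withdrawal of mastered material can be pictured as a simple queue. The sketch below is a minimal illustration and not Amplifire’s actual algorithm: the names `Response`, `run_module`, and `simulated_learner`, and the rule that a single answer that is both correct and confident counts as mastery, are all assumptions made for this example.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Response:
    correct: bool  # did the learner choose the right answer?
    sure: bool     # did the learner say they were sure?


def run_module(questions, answer_fn, max_steps=1000):
    """Cycle through questions, withdrawing each one once it is mastered.

    Mastery rule (hypothetical): one answer that is correct AND confident.
    Returns the number of attempts spent on each question.
    """
    queue = deque(questions)
    attempts = {q: 0 for q in questions}
    steps = 0
    while queue and steps < max_steps:
        question = queue.popleft()
        attempts[question] += 1
        response = answer_fn(question, attempts[question])
        if not (response.correct and response.sure):
            queue.append(question)  # not mastered yet, so it comes back later
        steps += 1
    return attempts


def simulated_learner(question, attempt):
    """Toy learner who needs a fixed number of tries per question."""
    tries_needed = {"easy": 1, "medium": 2, "hard": 3}[question]
    knows_it = attempt >= tries_needed
    return Response(correct=knows_it, sure=knows_it)


print(run_module(["easy", "medium", "hard"], simulated_learner))
# prints {'easy': 1, 'medium': 2, 'hard': 3}
```

Because the easy question leaves the queue after one attempt, the remaining rounds consist only of the harder material. A correct but unsure answer is not withdrawn either, which mirrors the idea that a lucky guess is not mastery.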

Feedback can boost learning by 500% when compared to non-feedback learning. In Amplifire, feedback comes in two forms. First, learners receive explanations about both correct and incorrect answers. This detailed elaboration strengthens both information storage and retrieval processes in the brain. Second, the review page shows learners precisely how they progressed through a module, from typically high levels of misinformation and doubt, to mastery of the material.

Confidence triggers a massive number of switches that affect learning. Making judgments of learning means storage and retrieval processes are activated. Asking “Are you sure?” results in metacognition (thinking about thinking) and causes both top-down attention and bottom-up salience. Confidence also spurs attention because it is correlated with social status, one of the most sought-after personal qualities in the human experience.

Progress motivates future activity through the buoyant feeling that comes from reaching your goals. Amplifire adapts to each learner’s level of mastery so that the material is appropriately difficult, but not so hard that motivation suffers. This ensures a learning experience in a gratifying emotional state that the learner is likely to want to repeat, and repetition is a key cognitive trigger for durable memory.

Misinformation is a unique feature of Amplifire that makes clear the possibility that confidently held, but wrong information may lead to error, injury, or embarrassment sometime in the future. That emotionally alarming possibility, when revealed in Amplifire, focuses your attention on the learning so you can avoid that outcome.

A Note on Leaderboards: They are motivating in games, but create a dangerous, dispiriting risk in an educational setting because people can feel their core intelligence being judged and ranked. We believe that all people can learn enormous amounts of useful information. For some, it merely takes more time.

-

Neurons That Learn

Your brain is not a computer. It may perform computations, but it’s nothing like the device that carries out spreadsheet calculations. Here’s a way to be completely sure of that contention: Imagine a friend shows you a picture of Abraham Lincoln and asks you to identify the person in the photo. Assuming you are an American, within a half second you have the answer. Because the neurons of the brain transmit signals rather slowly, we can calculate that you came up with Abe in about 100 discrete steps.
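The arithmetic behind that figure can be sketched as follows. The half-second response time comes from the example above; the figure of roughly 5 milliseconds for one neuron to signal the next is an assumption used here for illustration, since real values vary by neuron type.

```latex
\[
  N_{\text{steps}} \approx \frac{t_{\text{response}}}{t_{\text{step}}}
  = \frac{500\ \text{ms}}{5\ \text{ms}} = 100
\]
```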

Give a supercomputer that same problem and it will move thousands of times faster, yet it will take trillions of steps to search its database and finally return the answer (if it can). If we next cover up half of Abe’s face and ask you who it is, your response is faster still. Retrieving that information was quick and effortless. The computer, on the other hand, is now in big trouble. Nowhere in its prodigious database is there a half-picture of Abe Lincoln. Even at trillions of calculations a second, it can’t find a match. Somehow your brain can find Abe in 100 steps or less, and the computer can’t do it with trillions. Brains are not computers. They don’t need programming. They learn by themselves through exposure to the world.

Although both are capable of performing simultaneous operations (parallel processing), brains operate using interconnected patterns of neurons, the cells that make up the brain. They transmit, store, and fetch information using patterns formed through electrical and chemical connections at the synapse, the junction between neurons. This paper focuses on the neuron as the fundamental unit of learning and memory. As Joseph LeDoux asserts in “The Synaptic Self”: “The particular patterns of synaptic connections in an individual’s brain, and the information encoded by these connections, are the keys to who that person is.”

Consilience

In 1998, the Harvard biologist E.O. Wilson wrote “Consilience: The Unity of Knowledge” in which he argued that, eventually, the sciences would link up to form a rather beautiful and coherent description of reality. Wilson demonstrated that this vision had already taken shape in three areas of science: physics, chemistry, and biology. In physics, the interior descriptions of atoms and the fundamental forces of nature link one level up with the descriptions of electrons that form atomic bonds and give us chemistry. Chemistry then hands off to biology in the discipline of molecular biology. Biology heads further upwards with descriptions of reality at many consilient and interdependent levels: genes > cells > organs > organisms > species > ecosystems, and all those levels within biology are consiliently held together by Darwinian evolution. Wilson went on to show that the topmost levels of biology should someday smartly and smoothly hook up with sociology, anthropology, economics, political science, the humanities, art, culture, and religion, forming a chain of description and explanation.

Sociology and the study of human social dynamics are also informed by the study of the brain. We now know that many of the human social instincts are genetically wired into the brain at birth and form a kind of implicit memory of how to behave during interpersonal circumstances ranging from dinner with friends to negotiating a lease or buying a car.

Clearly, though, not everything is wired into the structures of the mind. While there may be hard-coded rules of syntax embodied in human brain structure, no one is born with English or Swahili in a ready-made package of wired-together neurons. Learning and memory are needed so that people can deal effectively with the specifics of their circumstances: the correct usage of nouns and verbs, along with the particular rules and expectations of family, friends, and culture. All this activity takes place in brains, so it’s perhaps not surprising that a growing understanding of the mind’s inner workings should help unify our understanding of social phenomena once thought irreconcilable. The tribal behaviors of New Guinean highlanders and the activity of Wall Street investment bankers can now be seen in much the same light. They both share essentially the same structural components of a brain at birth, but the environment that those brains occupy and perceive will exert a profound influence that will form specific implicit and explicit knowledge about their worlds. They will become far different people, yet remain enigmatically the same.

Lastly, an unexpected area now informed by neuroscience is ethics. Perhaps the images that made the Apollo moon missions so memorable were less the shots of the approaching moon and more the photographs of receding planet Earth. Similarly, images of the brain, with its connectivity, complexity, and universality among human beings, are helping philosophers imagine new ways to conceive of morality: the ways in which we treat our fellow human beings, and even creatures that have similar nervous systems. We can now begin to describe the mental processes that allow nervous systems to feel joy and sorrow when wired up properly and feel nothing whatsoever when the internal connections are missing or damaged. A new breed of philosopher is beginning to apply these discoveries to the problems of morality and ethics, a province that has heretofore been walled off from science, what Stephen Jay Gould called “non-overlapping magisteria.” Neuroscience is helping those magisteria to finally overlap a bit.
