-

Amplifire Recognized as Software Advice FrontRunner in Employee Training

In exciting news, the February 2022 FrontRunner Guide to Top Employee Training Software from Software Advice (of Gartner Digital Markets) recognized Amplifire as a top-five product in the category. Software Advice uses reviews from real software users to highlight the top-rated Employee Training Software products in North America. Inclusion in the list is determined by verified unique user reviews, required functionality, and product relevance across industries or sectors.

Amplifire was scored based on functionality, ease of use, value for money, likelihood to recommend, and customer support. It ranks in the top quadrant as a result of its customer satisfaction and usability scores, landing it among the top five recommended products in the category. View the full report here.

As an adaptive learning solution, we know that personalized learning based on brain science is the best way to help individuals learn what they need and help organizations reach their goals. That’s why Amplifire is thrilled to be recognized as a leader in the employee training category based on end-user ratings.

Learn more about how Amplifire is helping organizations put the power of brain science to work.

FrontRunners constitute the subjective opinions of individual end-user reviews, ratings, and data applied against a documented methodology; they neither represent the views of, nor constitute an endorsement by, Software Advice or its affiliates.

-

Why Creating a Learning Culture is So Important for an Organization’s Success

It is becoming difficult to keep up with the nuances of workforce trends, from “The Great Resignation” to “The Great Reshuffling.” The point, however, is that workers are demanding attention to an underserved aspect of their professional lives: employee experience.

This is where the concept of “learning culture” can save the day. According to the Work Institute, the #1 reason employees left their jobs was a lack of professional development opportunities and support, which costs businesses revenue and productive time. The solution to this enormous employment debacle is to put in the work to create and build a learning culture for your organization.

What is a “learning culture”?

You may hear the terms “reskill” and “upskill” a lot these days, as the shifting constitution of the labor market is a hot topic. However, “learning culture” is more than simply the act of acquiring new skills – it’s more personal than that. A learning culture is an environment where employees are encouraged to seek, share, and apply new knowledge for the sake of personal and professional development.

But isn’t every culture a “learning culture” in a way? Not necessarily. To establish and maintain a learning culture, employees must have the support of their organization to pursue their goals. Learning & Development professionals understand the work that is involved to implement a unified culture at scale – from C-suite support to employee buy-in. We’ll cover some culture creation tips next.

How to create a learning culture

Research shows that employees yearn for opportunities to develop their skills – 94% of employees said that they would stay at a company longer if that company invested in their learning. That is why it is important to get leadership onboard and make the transition easy for employees.

Win C-suite support

Culture is not always a top priority for C-suite and leadership – much to the chagrin of L&D professionals. However, statistics support the need for continued learning opportunities for employees as part of an organization’s baseline. Employee turnover is costly. Moreover, a study by MIT Sloan School of Management found that a training program focused on soft skills delivered a 250% ROI within eight months. Present leadership with these facts. Support is more feasible with a healthy ROI.

Organizations that invest most heavily in culture/employee experience are found:

- 11.5x as often in Glassdoor’s Best Places to Work

- 4.4x as often in LinkedIn’s list of North America’s Most In-Demand Employers

- 2.1x as often on the Forbes list of the World’s Most Innovative Companies

- 2x as often in the American Customer Satisfaction Index

*Source: Jacob Morgan

Get employee buy-in

While employees crave learning and development opportunities, they also want it to be uncomplicated. Moreover, in a world where much of the workforce is still virtual or at least hybrid, a technical solution is necessary to power learning. With an adaptive learning solution that can support virtual and hybrid instruction, employees can easily embrace development.

5 Benefits of learning culture

Industry leaders recognize the benefits of learning culture as the fundamental ingredients for success:

- Attract and retain top talent – According to a LinkedIn survey, the top reason employees look elsewhere is the “inability to learn and grow” where they are. The same survey reveals that learners at work are 48% more fulfilled in their roles.

- Gain competitive advantage – In MIT and Deloitte’s most recent study, the most successful, fast-growing, digitally enabled companies have invested in the way individuals and organizations learn.

- Foster growth mindset – Continuous learning goes hand in hand with growth.

- Increase customer satisfaction – Happy, well-informed employees result in better served, more satisfied customers.

- Boost productivity – Satisfied workers are up to 20% more productive at work.

Again, these benefits are not just for the sake of it – they are essential for organizations’ survival and prosperity in the modern age.

_____________________________________________________________________

Amplifire’s goal is to support businesses looking to gain an edge in the modern age by offering an adaptive learning solution that supports employee learning, training, and growth. Learn more about how we can power learning culture at your organization.

-

Call center enjoys both faster onboarding and higher NPS

This leading telecom is one of the largest wireless network operators in the US. With over 50 million customers, they prioritize customer service.

Employee turnover in call centers is high, running from 30% to 50% per year. New hires need rapid, effective training to efficiently solve customers’ problems, cultivate customer loyalty, and position customers with the optimal set of features for their needs. Extra minutes per call add up to enormous annual costs, and unsuccessful resolutions over the phone add more. In response, call centers closely measure operations to optimize results.

This telecom measured the effectiveness of Amplifire on new hire performance at two large customer call centers where agents took calls to handle questions about features, options, bandwidth, and billing. Using a control group to ensure meaningful before-after analysis, the improvements in new hire performance delivered a substantial ROI.

-

51% increase in student grades

Transform Their Learning Experience

A major challenge that universities and colleges face is student retention. This private university wanted to know whether it could identify at-risk students and intervene before it was too late.

They reduced student failure by using Amplifire’s reporting dashboard, which shows instructors the knowledge gaps and struggle that most students experience during their time in higher education.

Then they used the principles from cognitive science built into Amplifire to make knowledge stick so students could become more successful in understanding the curriculum and pass their high-stakes exams.

-

A Bad Employee Or A Bad Week

Matthew J. Hays, PhD

Senior Director of Research and Analytics

What’s Going On When A Learner Refuses To Learn?

On a humid summer evening, George Williams was clocked at 78 mph in a 35 mph zone. He ran four red lights as the police followed him. He finally stopped, leaped out of his car, and ran into a building.

What do you think of George so far?

A few steps into the building, he collapsed. Nurses swarmed him, applying compresses to the wounds they could see. After four hours of surgery and three months of physical therapy, George made a full recovery.

Now, what do you think of George?

What is The Fundamental Attribution Error?

The fundamental attribution error (FAE) is when you assume that someone’s behavior is due to their nature rather than their circumstances. We all fall prey to it. The numbskull who cuts you off in traffic is clearly a terrible person.

Of course, when you’ve cut someone off in traffic, it’s been for a good reason. You were about to be late for a meeting, or someone was hurt, or one of your kids had a restroom emergency-type situation going on. You can excuse your own behavior, but there are no excuses for others. If someone’s acting like a jerk, they must be a jerk.

Full disclosure: I made this error for years. I run research and analytics for an online eLearning platform (Amplifire). In many other systems, you can just put a stapler on the spacebar, go get a coffee, and your training is done when you come back. But in ours, you have to actually master the material. We’ve developed sophisticated behavior analysis algorithms that try to identify when someone attempts to rush through Amplifire without learning. For the longest time, I have been referring to people who do this, who interact with the system in a disengaged or disingenuous manner, as “goofballs.”

An example of goofballery: Responding “I don’t know yet” in less time than it could possibly take to read a question, over and over and over again. (We even have messaging that pops up and makes it clear that their strategy won’t work. One of the messages concludes by telling them, “Look, you might as well just learn.”)
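To make that example concrete, here is a minimal sketch of how a rapid “I don’t know yet” streak might be flagged. It is not Amplifire’s actual algorithm: the `Answer` type, the reading-speed ceiling, and the streak threshold are all assumptions chosen for illustration.

```python
from dataclasses import dataclass

IDK = "I don't know yet"

# Assumed ceiling on reading speed; a response faster than this implies the
# question could not have been read. The value is illustrative only.
MAX_WORDS_PER_SECOND = 8.0
# Hypothetical number of consecutive rapid responses needed to raise a flag.
STREAK_THRESHOLD = 3


@dataclass
class Answer:
    choice: str          # the option the learner selected
    seconds: float       # time elapsed before responding
    question_words: int  # length of the question text in words


def too_fast_to_read(answer: Answer) -> bool:
    """True if the response came sooner than the question could be read."""
    min_seconds = answer.question_words / MAX_WORDS_PER_SECOND
    return answer.seconds < min_seconds


def rapid_idk_streak(answers: list[Answer]) -> bool:
    """True if the learner gave STREAK_THRESHOLD rapid IDK responses in a row."""
    streak = 0
    for answer in answers:
        if answer.choice == IDK and too_fast_to_read(answer):
            streak += 1
            if streak >= STREAK_THRESHOLD:
                return True
        else:
            streak = 0  # any engaged response breaks the streak
    return False
```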

But what I need to keep in mind is that someone who engages in goofballery isn’t necessarily a goofball. I learned this lesson quite acutely from one of our clients. Let’s call them Acme. They were using Amplifire in a several-week-long onboarding program for new call center agents.

Even before we had algorithmic behavior categorization, we had some idea of what a goofball looked like in our standard reports. A couple of Acme learners fit the bill perfectly. They rushed through questions but took longer to learn than average and struggled mightily to grasp even basic concepts…it was clear that something other than normal, engaged learning behavior was going on.

I’ve been a big fan of naming reports after the question they answer. Sometimes a report user wants a Learner Progress report, sure. But sometimes, they just want to know who isn’t done. So we ought to give them a “Who Isn’t Done?” report.

The goofballs at Acme made me want to make a “Who Should I Reprimand?” or “Who’s Screwing Around?” report. Maybe even a “Who Should I Fire?” report. It’s exactly the kind of insight that call center clients are looking for. The success metrics almost calculate themselves: cost savings from reducing training class size, plus improved call performance once the trainees become agents (since you’ve filtered out the ones who appeared not to care about the company or how to do well at it).

Cooler heads prevailed, and we instead went to Acme with a couple of names and a curious tone. We asked what they thought might be going on and let them follow up with the trainees. They came back to us with awe and thanks…but not for the reasons the FAE had led me to expect. Instead, one trainee was living in his car in the training center parking lot. You can imagine that that kind of stress would make you disengage from parts of your job you couldn’t tell were important. The other trainee’s father had just died. He was in no shape for a training program that week…but was able to pull it together the following week.

Who Needs Your Help?

My new name for the goofball report is “Who needs YOUR help?” These trainees needed the opposite of getting fired; they needed support. Our data identified that, but I fell victim to the fundamental attribution error. They weren’t bad employees. They were employees going through a bad time.

My team and I are trying to avoid the FAE in our report labels and data interpretations. I’m also adopting this in my personal life. When someone cuts me off in traffic, I wish them good luck.

Well, I try to, anyway. I’m working on it.

-

Ladder-related incidents reduced by 31.5%

Ladder Accidents

Across all industries, the highest fatal and nonfatal ladder fall incident (LFI) rates were in two occupational groups: construction and extraction (e.g., mining), followed by installation, maintenance, and repair occupations.

Every year, over 300 people die in ladder-related accidents, and thousands suffer disabling injuries. According to a report from Liberty Mutual, the direct compensation and medical treatments associated with these falls cost American businesses $4.6 billion a year.

Ladder Safety Training

For this telecom/cable service provider, technicians who experienced a ladder fall averaged 81.25 days of lost work. Their top executives employed Amplifire to reduce the number of incidents.

Amplifire worked with their L&D team to develop a Ladder Safety training course that focused on how to perform safety checks according to OSHA guidelines. The course was then rolled out to 862 supervisors. Each supervisor has 15 subordinates.

Confidently Held Misinformation

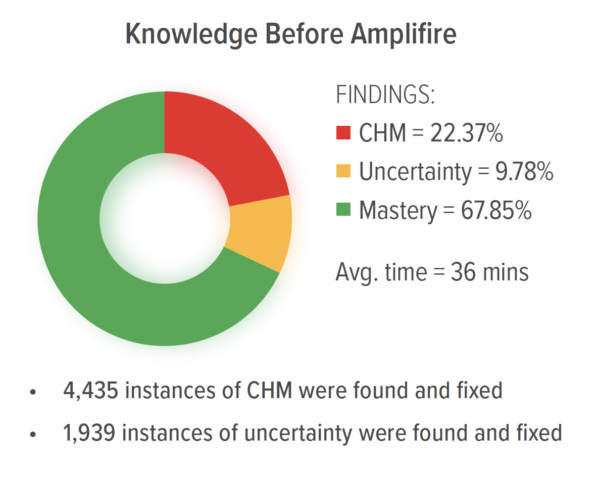

Confidently held misinformation (CHM) exists in all individuals and organizations. It is one of the largest contributors to costly errors.

CHM exists when an individual is sure they are right, but they are wrong. It creates misjudgments and mistakes. Misplaced confidence can be perilous—and result in adverse incidents.

Amplifire has the unique power to detect and correct CHM. The platform requires learners to state their certainty when they answer questions. The system then classifies which questions were answered confidently but incorrectly—representing confidently held misinformation—and customizes a module in real-time that will lead the learner to rapid mastery of the topic.
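As a rough illustration of the classification described above, the sketch below labels each response by combining stated certainty with correctness. The `Response` type, the two-level certainty flag, and the category names are assumptions made for this example; they are not Amplifire’s actual data model or algorithm.

```python
from dataclasses import dataclass


@dataclass
class Response:
    question_id: str
    confident: bool  # learner stated they were sure (assumed two-level scale)
    correct: bool


def classify(response: Response) -> str:
    """Label one response by combining certainty with correctness."""
    if response.confident and response.correct:
        return "confident_mastery"
    if response.confident and not response.correct:
        return "confidently_held_misinformation"
    if response.correct:
        return "uncertain_but_correct"
    return "uncertain_and_incorrect"


def chm_questions(responses: list[Response]) -> list[str]:
    """Return the IDs of questions answered confidently but incorrectly."""
    return [
        r.question_id
        for r in responses
        if classify(r) == "confidently_held_misinformation"
    ]


responses = [
    Response("q1", confident=True, correct=True),
    Response("q2", confident=True, correct=False),
    Response("q3", confident=False, correct=False),
]
chm = chm_questions(responses)  # only "q2" is confident and wrong
```

In a sketch like this, the question IDs in `chm` are the ones a follow-up module would prioritize.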

The cognitive science behind the platform has proven itself in over one billion learner interactions.

Knowledge Variation

The variation of knowledge among supervisors was high, with some supervisors quite misinformed and others showing confident mastery of the topic. Surprisingly, some of the more senior supervisors held more CHM than their counterparts.

Download the full case study to see the training ROI realized by this telecom provider.

-

Using True-False Questions to Promote Learning

Matthew J. Hays, PhD

Senior Director of Research and Analytics

Tests don’t just evaluate what you know. The act of being tested actually makes your memory stronger. Think about it. You want to improve your ability to retrieve information from your brain. What could be better practice than…retrieving information from your brain?

Unfortunately, tests get a bad rap – but some are perceived to be even worse than others. It seems like the harder it is to make a test and evaluate its responses, the better its reputation. Essay tests are supposedly the best, while everyone looks down on multiple-choice tests (even though they shouldn’t). True-false tests are at the very bottom of the heap.

But they don’t have to be.

In a series of studies published in July of 2020, researchers in the Bjork Learning and Forgetting Lab at UCLA have revealed how to construct true-false questions in a way that enhances learning beyond what previous true-false questions have been able to achieve.

For example, suppose you wanted someone to learn that Steamboat Geyser is the tallest geyser in Yellowstone Park. After having them read about geysers, you could use a true-false test to reinforce what they read. On that memory-enhancing test, you could use a true item: “Steamboat Geyser is the tallest geyser in Yellowstone Park, true or false?” You could also use a false item: “Castle Geyser is the tallest geyser in Yellowstone Park, true or false?”

These questions are somewhat limited in their instructional value. When an item is true, it helps people learn only about the topic of the true-false statement. For example, the Steamboat question above only reinforces that Steamboat is the tallest. When an item is false, however, it helps people learn only about information related to the true-false statement. For example, the Castle question above only reinforces that Steamboat is the tallest – but does nothing to enhance learning about Castle Geyser itself.

The Bjork lab’s discovery: Inserting a contrasting clause into the question activates more concepts in your brain. For example, you could make a true item with a contrasting clause like this: “Steamboat Geyser (not Castle Geyser) is the tallest geyser in Yellowstone Park, true or false?” You could also make a false contrasted item like this: “Castle Geyser (not Steamboat Geyser) is the tallest geyser in Yellowstone Park, true or false?” Both questions help reinforce information about both geysers.
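The contrasted-item pattern above is regular enough to template. The sketch below is one possible way to generate both the true and the false contrasted item from a single fact; the function name and its parameters are hypothetical and do not reflect how the Amplifire authoring system works.

```python
def make_contrasted_pair(correct: str, foil: str, predicate: str) -> list[tuple[str, bool]]:
    """Build a true and a false contrasted true-false item from one fact.

    `correct` is the subject that makes the predicate true, and `foil` is the
    competing subject named in the contrasting clause.
    """
    def item(subject: str, contrast: str) -> str:
        return f"{subject} (not {contrast}) {predicate}, true or false?"

    return [
        (item(correct, foil), True),   # true item: correct subject leads
        (item(foil, correct), False),  # false item: foil leads
    ]


pair = make_contrasted_pair(
    correct="Steamboat Geyser",
    foil="Castle Geyser",
    predicate="is the tallest geyser in Yellowstone Park",
)
for text, answer in pair:
    print(answer, "-", text)
```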

We are building these findings into the Amplifire platform, our authoring system, and our content analytics. If you’d like to know more about the science or the software, reach out here!

-

Amplifire’s CEO Reflects on 2020: What This Year Has Taught Us

Bob Burgin

Amplifire CEO

2020 – a challenging year for everyone. Enough said?

Well, maybe not. I have to say that it has been truly inspiring to witness our clients’ heroic response during this trying time. Health systems’ heroic and dedicated staff took significant personal risk to care for us. T-Mobile kept lines open while delivering superior customer care. Our higher education clients helped over one million students succeed in their efforts to learn remotely. Professionals across the country found the motivation to earn certifications as their career paths were at risk. More than anything else, we thank you. Your tenacity during hard times is inspiring.

As for Amplifire, well, I think it has been a good year. You see, the world never entirely goes back to what it was before a time like this. Something we have all known for years but struggled to create just advanced 10+ years in only nine months. It’s the notion that sophisticated adaptive online training done right significantly outperforms classroom training.

I wouldn’t wish this year on anyone, but sometimes out of terrible events comes powerful change. I suspect we will get on airplanes for business a little less often now that we have broken the self-consciousness barriers and learned to look into each other’s eyes on a web call. And we have a new pattern of staying connected with loved ones across the country and the world.

And I similarly believe we will not go back to putting people in classrooms and teaching to the lowest proficiency in the room. This is the promise of adaptivity—people get instruction tailored to their location and circumstances on the path to mastery. Some go fast, others take more time to absorb information, but everyone can become proficient.

In 2020, we expanded our client relationships and added new clients. And as the radical move to online adaptive training hit hard, the demand for our advanced capabilities grew, and we added a host of new reseller partners fully embracing this new way of learning.

It was a challenging year, without a doubt. In April, we were taking pay cuts to ensure our ability to keep our no-layoff promise to our employees during COVID. Like everyone, we had no idea what the future would hold.

We will continue our focus on advancing the art and science of Knowledge Engineering to drive improved performance. It’s never been truer than this present moment that we live and work in a knowledge economy where human flourishing derives from the information stored in our minds as long-term memory.

It turns out that the most powerful, complicated, amazing computing device is not in some university basement. Not calculating rocket trajectories at NASA or finding obscure info for Google. Although it designed all the computing devices ever used.

It’s the human mind.

And finding ways to load knowledge into the human mind, well, that’s our obsession.

As 2020 draws to a close, we wish you our best and share our hopes for an exhilarating 2021.

And a very special thank you to our team, who never blinked.

Bob Burgin

CEO

-

Amplifire Secures Patent for Analytics Regarding the Confidence of Learners

BOULDER, Colo., Nov. 2, 2020 /PRNewswire/ — Amplifire, an eLearning company, announced today the award of an additional patent by the United States Patent Office. Amplifire holds patents issued in the US, EU, Australia, Canada, Japan, South Korea, and other countries and jurisdictions worldwide. The new patent is titled Display and Report Generation Platform for Testing Results.

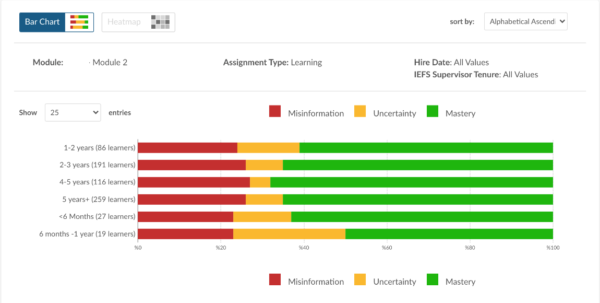

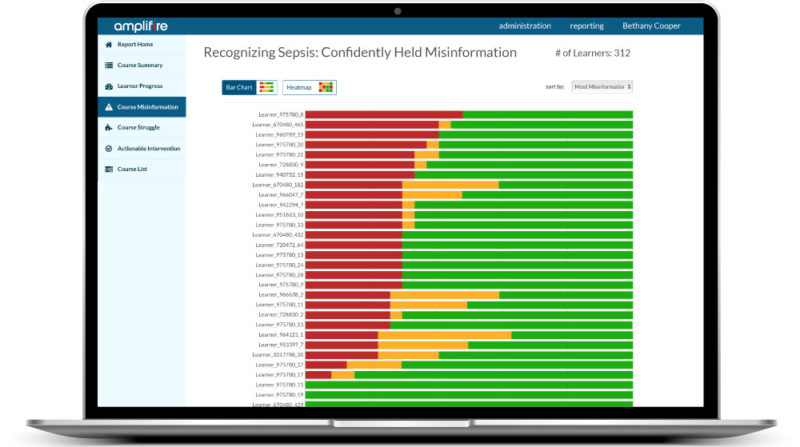

The new patent, US Patent No. 10,803,765, is directed to aspects of Amplifire’s learning platform, which includes unique Answer Key and Reporting Dashboard features. The Answer Key allows learners to signify both their confidence and answer choice in one click, which fosters greater metacognition. The Reporting Dashboard builds visual analytics displaying a learner’s misinformation, uncertainty, and struggle in bar charts and heatmaps. Search and sorting features allow managers or instructors to see their organization’s knowledge at any scale, from individual to team to division, and across the enterprise.

“Our customers view knowledge as a strategic way to compete.”

Confidence measures shown in the reporting dashboard are essential because confidence is the precursor to human behavior. It appears as internal thoughts such as, “I’ve got this,” or, “I haven’t a clue what to do.” The Amplifire dashboard reports and sorts using the confidence a learner displayed when they answered questions in the assessment phase of learning and subsequent refreshers. The most dangerous form of confidence occurs when a learner is sure but incorrect, referred to as confidently held misinformation, driving them towards a mistake.

The ability to see how confidence is bound to knowledge gives learning officers, administrators, and instructors a window into the risk of future mistakes in their workforce. Visualizing human fallibilities such as misinformation, uncertainty, and struggle lends unprecedented guidance to managers and instructors. For the first time, they can see the people who improve in the platform, where pockets of risk lie, and who needs at-the-elbow help.

Amplifire CEO, Bob Burgin, noted, “We are proud that the US Patent Office noticed our reporting dashboard’s unique features and awarded our efforts with a patent. Amplifire’s product development team regularly thinks up new ways to help people overcome the knowledge problems inherent in the human condition. Our customers view knowledge as a way to compete. They understand it’s strategically in their interest to help their people reach new levels of performance.”

About Amplifire

With over 2.4 billion learner interactions, Amplifire (www.amplifire.com) is the leading adaptive learning platform built from discoveries in brain science that help learners master information faster, retain knowledge longer, and perform their jobs better. It detects and corrects the knowledge gaps and misinformation that exist in the minds of all humans so they can better attain their real potential. Healthcare, education, and Fortune 500 companies use Amplifire’s patented learning algorithms, analytics, and diagnostics to drive exceptional outcomes with a significant return on their investment.

-

Self-Regulated Learning: Beliefs, Techniques, and Illusions

Authors

Robert A. Bjork,1 John Dunlosky,2 and Nate Kornell3

1Department of Psychology, University of California, Los Angeles, California 90095, 2Department of Psychology, Kent State University, Kent, Ohio 44242, 3Department of Psychology, Williams College, Williamstown, Massachusetts 01267

Abstract

Knowing how to manage one’s own learning has become increasingly important in recent years, as both the need and the opportunities for individuals to learn on their own outside of formal classroom settings have grown. During that same period, however, research on learning, memory, and metacognitive processes has provided evidence that people often have a faulty mental model of how they learn and remember, making them prone to both misassessing and mismanaging their own learning. After a discussion of what learners need to understand in order to become effective stewards of their own learning, we first review research on what people believe about how they learn and then review research on how people’s ongoing assessments of their own learning are influenced by current performance and the subjective sense of fluency. We conclude with a discussion of societal assumptions and attitudes that can be counterproductive in terms of individuals becoming maximally effective learners.

The Need for Self-Managed Learning

Our complex and rapidly changing world creates a need for self-initiated and self-managed learning. Knowing how to manage one’s own learning activities has become, in short, an important survival tool. In this review we summarize recent research on what people do and do not understand about the learning activities and processes that promote comprehension, retention, and transfer.

Importantly, recent research has revealed that there is in fact much that we, as learners, do not tend to know about how best to assess and manage our own learning. For reasons that are not entirely clear, our intuitions and introspections appear to be unreliable as a guide to how we should manage our own learning activities. One might expect that our intuitions and practices would be informed by what Bjork (2011) has called the “trials and errors of everyday living and learning,” but that appears not to be the case. Nor do customs and standard practices in training and education seem to be informed, at least reliably, by any such understanding.